To fuse images from a light intensifier (LI) with infrared (IR) images, and thus provide the pilot with information from multiple sources, the two modalities must first be registered. Because they capture different physical properties of the scene, their intensities are not directly comparable; the chosen approach therefore relies on geometric information, namely the edges of each image.
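To see why edges give the two modalities a usable common ground, consider a change of contrast: it alters intensities but not where the edges are. The minimal NumPy sketch below is not taken from the paper; it computes a gradient-magnitude edge map with `np.gradient`, and the helper name `edge_map`, the toy images and the contrast inversion (a stand-in for the different LI and IR sensor responses) are all hypothetical.

```python
import numpy as np

def edge_map(image: np.ndarray) -> np.ndarray:
    """Gradient-magnitude edge map of a 2-D image (hypothetical helper).

    np.gradient uses central differences inside the image and one-sided
    differences on the border; it returns the derivative along rows first.
    """
    gy, gx = np.gradient(image.astype(float))
    return np.hypot(gx, gy)

# Toy "LI" image: a bright square on a dark background.
li = np.zeros((8, 8))
li[2:6, 2:6] = 1.0
# Toy "IR" image: the same scene with inverted, rescaled contrast
# (an assumption standing in for the different sensor responses).
ir = 1.0 - 0.5 * li

# Intensities disagree, yet the edges are found at exactly the same pixels.
print(np.array_equal(edge_map(li) > 0, edge_map(ir) > 0))  # True
```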

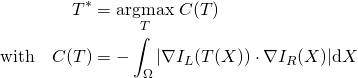

The goal is to find the transformation that maps the LI image into the frame of reference of the IR image by minimizing the following energy:

(1)

This metric is built on the edges of each image and only takes into account the edges that occur in both modalities: an edge present in just one of them does not contribute, which makes the metric robust to outliers.
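The sketch below is a simplified, hypothetical stand-in, not energy (1) itself. It scores alignment with the pixel-wise product of the two gradient magnitudes; because that product vanishes wherever either image is flat, an edge seen by only one modality adds nothing, which is the property described above. The names `edge_map` and `edge_energy` and the toy images are illustrative assumptions, not the authors' code.

```python
import numpy as np

def edge_map(image: np.ndarray) -> np.ndarray:
    """Gradient-magnitude edge map (central differences via np.gradient)."""
    gy, gx = np.gradient(image.astype(float))
    return np.hypot(gx, gy)

def edge_energy(u: np.ndarray, v: np.ndarray) -> float:
    """Hypothetical edge-coincidence energy; lower means better aligned.

    The pixel-wise product |grad u| * |grad v| is zero wherever either image
    is flat, so an edge that exists in only one modality contributes nothing.
    """
    return -float(np.mean(edge_map(u) * edge_map(v)))

li = np.zeros((16, 16))
li[4:10, 4:10] = 1.0
ir = 2.0 - li                         # same structure, inverted contrast

# Add a structure that only the IR image sees, away from the LI edges.
ir_outlier = ir.copy()
ir_outlier[13:15, 13:15] += 3.0

# The outlier edge leaves the energy unchanged...
print(edge_energy(li, ir) == edge_energy(li, ir_outlier))             # True
# ...while misaligning the images by 3 columns raises (worsens) it.
print(edge_energy(li, np.roll(ir, 3, axis=1)) > edge_energy(li, ir))  # True
```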

A gradient-descent optimization scheme makes it possible to estimate the optimal transformation between the two modalities quickly.
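The optimization step can be illustrated with a minimal gradient-descent sketch under strong simplifying assumptions: the transformation is restricted to a 2-D translation, the energy is the negative cosine similarity of gradient-magnitude edge maps (a hypothetical choice, not the paper's energy), gradients are taken by central finite differences, and the step size is chosen by Armijo backtracking. Sub-pixel warping uses the Fourier shift theorem, which treats images as periodic. All function names and the synthetic test images are hypothetical.

```python
import numpy as np

def translate(img, tx, ty):
    """Sub-pixel translation using the Fourier shift theorem.

    out(y, x) is img evaluated at (y - ty, x - tx) by trigonometric
    interpolation, assuming the image is periodic.
    """
    ky = np.fft.fftfreq(img.shape[0])[:, None]
    kx = np.fft.fftfreq(img.shape[1])[None, :]
    phase = np.exp(-2j * np.pi * (ky * ty + kx * tx))
    return np.real(np.fft.ifft2(np.fft.fft2(img) * phase))

def edge_map(img):
    gy, gx = np.gradient(img)
    return np.hypot(gx, gy)

def energy(t, li, ir_edges):
    """Hypothetical energy: minus the cosine similarity of the edge maps of
    the warped LI image and the IR image (equals -1 at perfect alignment)."""
    a = edge_map(translate(li, -t[0], -t[1]))
    return -np.sum(a * ir_edges) / np.sqrt(np.sum(a * a) * np.sum(ir_edges * ir_edges))

def numerical_grad(f, t, h=1e-4):
    """Central finite-difference gradient of a scalar function f at t."""
    g = np.zeros_like(t)
    for i in range(t.size):
        e = np.zeros_like(t)
        e[i] = h
        g[i] = (f(t + e) - f(t - e)) / (2 * h)
    return g

def register_translation(li, ir, t0=(0.0, 0.0), step0=20.0, iters=200):
    """Estimate the translation (tx, ty) of the LI content relative to the IR
    frame by gradient descent with Armijo backtracking."""
    ir_edges = edge_map(ir)
    f = lambda t: energy(t, li, ir_edges)
    t = np.array(t0, dtype=float)
    for _ in range(iters):
        g = numerical_grad(f, t)
        e_t, step = f(t), step0
        # Halve the step until the energy decreases sufficiently.
        while f(t - step * g) > e_t - 1e-4 * step * (g @ g) and step > 1e-10:
            step *= 0.5
        t = t - step * g
        if np.linalg.norm(step * g) < 1e-9:  # negligible update: converged
            break
    return t

# Synthetic pair on an odd-sized grid (no Nyquist bin, so shifted images stay
# real): a Gaussian blob as the IR image and a shifted, contrast-inverted copy
# as the LI image. The true shift is (1.5, -1.0).
yy, xx = np.mgrid[0:65, 0:65]
ir = np.exp(-((xx - 32.0) ** 2 + (yy - 32.0) ** 2) / (2 * 4.0 ** 2))
li = 1.0 - translate(ir, 1.5, -1.0)

t = register_translation(li, ir)
print(np.round(t, 3))
```

Restricting the transformation to a translation keeps the sketch short. Because gradient descent only converges to a local minimum, the sketch also relies on the initial guess `t0` being reasonably close to the true shift.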

Registration results on real data

Related papers:

C. Sutour, J.-F. Aujol, C.-A. Deledalle and B. D. De-Senneville. Edge-based multi-modal registration and application for night vision devices. Journal of Mathematical Imaging and Vision, pages 1–20, 2015.